Mapping Template

Populate the mapping template

Now that you are in Kibana, you need to ensure that the data maps properly on ingestion. In Elasticsearch, you use a “template” to define index settings and mappings. Download the template from the following link so you can review it, then use Dev Tools in Kibana to create it: https://search-sa-log-solutions.s3-us-east-2.amazonaws.com/flowlogs/config/flowlogs_mapping.json

Let us review what is in the template. First you have index_patterns. This directive matches any index whose name fits the pattern “flowlogs-*”. If a request to write to an index comes in and that index does not exist yet, Elasticsearch creates it with the settings and mappings defined in the matching template. As your indexes rotate and a new one is created for each new day, the template matches those requests and the new index is created for you, as long as the name follows the pattern. In your case, the solution creates daily indexes named like “flowlogs-YYYY-mm-DD”. That name matches the pattern, so each index is created with the specifications outlined below.
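The matching behavior described above can be illustrated with a minimal Python sketch. It is only an approximation: it uses the standard library's `fnmatchcase` to stand in for Elasticsearch's own wildcard matching, which is adequate for a simple trailing `*` but is not how Elasticsearch implements it. The helper names, the sample dates, and the `vpclogs-` index name are hypothetical and chosen only for illustration.

```python
from datetime import date, timedelta
from fnmatch import fnmatchcase

# Pattern taken from the template's index_patterns entry.
INDEX_PATTERN = "flowlogs-*"


def daily_index_name(day: date) -> str:
    """Build a daily index name in the flowlogs-YYYY-mm-DD form (hypothetical helper)."""
    return f"flowlogs-{day:%Y-%m-%d}"


def template_applies(index_name: str, pattern: str = INDEX_PATTERN) -> bool:
    """Approximate the template match with a shell-style wildcard comparison."""
    return fnmatchcase(index_name, pattern)


if __name__ == "__main__":
    start = date(2020, 1, 30)  # arbitrary example date
    for offset in range(3):
        name = daily_index_name(start + timedelta(days=offset))
        print(name, template_applies(name))
    # An index outside the pattern would not pick up the template.
    print("vpclogs-2020-01-30", template_applies("vpclogs-2020-01-30"))
```

Each daily name in the loop matches the pattern, including the rollover from January into February, while the differently prefixed name does not.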

    PUT _template/flowlogs_template
    {
      "index_patterns": [
        "flowlogs-*"
      ],
      "settings": {
        "number_of_shards": 3,
        "number_of_replicas": 1
      },
      "mappings": {
        "properties": {
          "account-id": {
            "type": "text",
            "fields": {
              "keyword": {
                "type": "keyword",
                "ignore_above": 12
              }
            }
          },
          "action": {
            "type": "long"
          },
          "bytes": {
            "type": "long"
          },
          "dest-address": {
            "type": "ip"
          },
          "dest-port": {
            "type": "long"
          },
          "end-time": {
            "type": "date"
          },
          "interface-id": {
            "type": "text",
            "fields": {
              "keyword": {
                "type": "keyword",
                "ignore_above": 32
              }
            }
          },
          "packets": {
            "type": "long"
          },
          "protocol": {
            "type": "long"
          },
          "source-address": {
            "type": "ip"
          },
          "source-port": {
            "type": "long"
          },
          "start-time": {
            "type": "date"
          },
          "version": {
            "type": "long"
          }
        }
      }
    }

Copy the text that you downloaded into Dev Tools

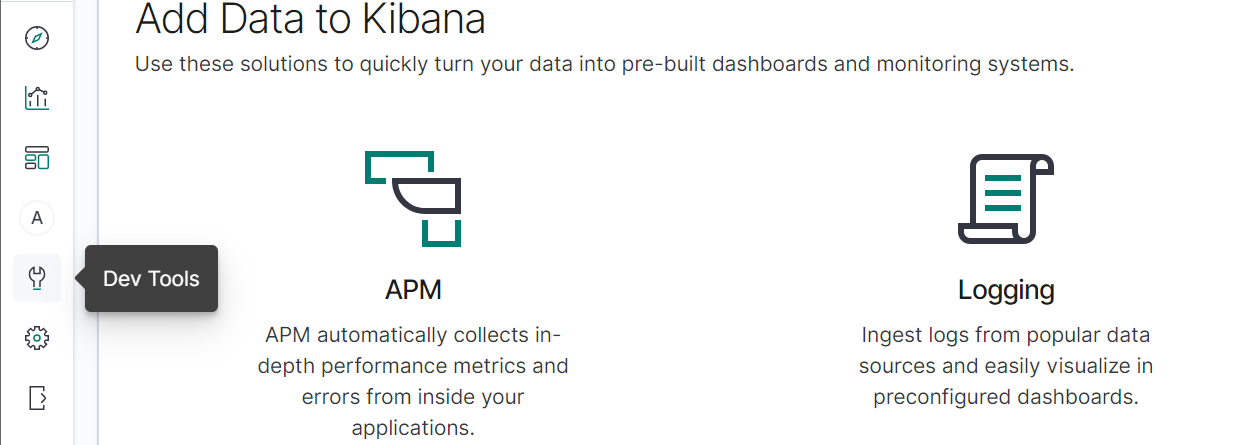

Navigate to the Dev Tools plugin.

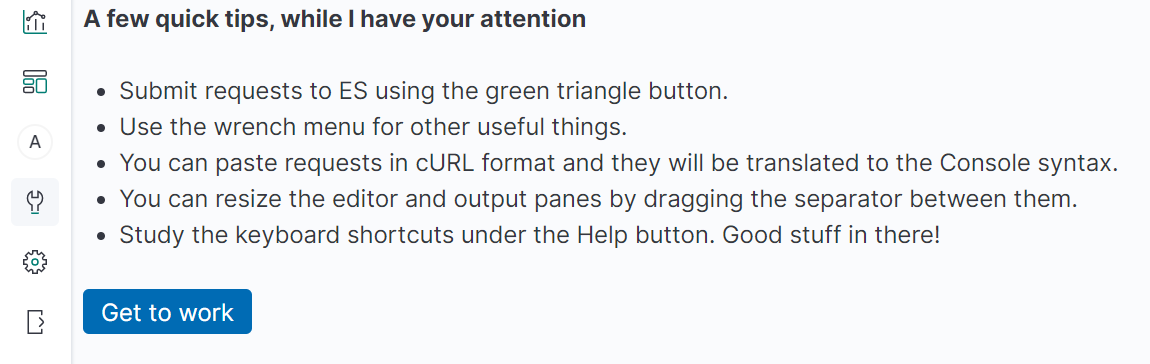

Confirm that you see a similar screen, click “Get to work”, and then paste the entire text of the template into the console and run the request.

Ensure you receive a confirmation that says “acknowledged” : true.
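If you later script this step instead of using the console, the same confirmation check can be expressed as a small Python sketch. It assumes you already have the raw response body as a string; the function name is hypothetical, and the error body in the example is illustrative only, not captured from a real cluster.

```python
import json


def template_acknowledged(response_body: str) -> bool:
    """Return True only if the response JSON contains "acknowledged": true."""
    try:
        payload = json.loads(response_body)
    except json.JSONDecodeError:
        # A body that is not valid JSON cannot be a confirmation.
        return False
    return isinstance(payload, dict) and payload.get("acknowledged") is True


if __name__ == "__main__":
    print(template_acknowledged('{"acknowledged": true}'))
    # Illustrative error body; the real content depends on what went wrong.
    print(template_acknowledged('{"error": {"type": "parsing_exception"}, "status": 400}'))
```

The check is strict on purpose: anything other than a JSON object with `acknowledged` set to `true` is treated as a failure, so a malformed template does not pass silently.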

Now you have the template set up. Next, set up the AWS Lambda configuration that reads events from the Amazon SQS queue holding the Amazon S3 bucket notification messages and processes them into Amazon Elasticsearch Service.